For years, the promise of the “AI PC” has echoed through the tech world, conjuring images of effortlessly intelligent machines. But the reality, until recently, felt more like a glimpse of potential than a tangible experience. These so-called AI PCs, often sleek laptops boasting a Neural Processing Unit (NPU), seemed to exist primarily as impressive demonstrations, failing to deliver on the revolutionary capabilities they hinted at.

The surprising truth? Local AI is not only here but thriving, and it owes almost nothing to the NPUs that were supposed to drive its rise. In fact, if you look only at what runs on an NPU, you might conclude that local AI has stalled. The most powerful and accessible tools don’t use NPUs at all; they run on GPUs.

The grand vision of NPUs ushering in a new era of on-device intelligence has, so far, fallen remarkably short. NPUs can handle certain narrow tasks efficiently, but the transformative AI experiences promised to consumers remain elusive, and the marketing hype around NPU-powered AI PCs has yet to translate into a compelling user experience.

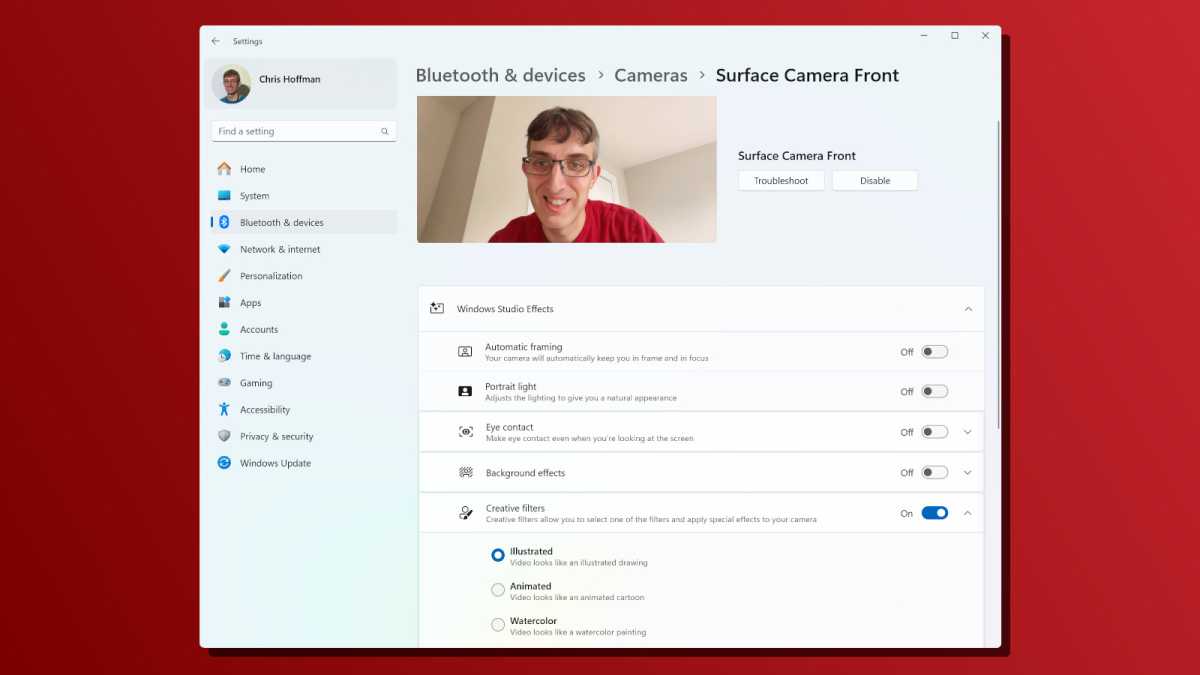

Consider the features currently available on “Copilot+ PCs,” like Windows Recall, which periodically captures snapshots of your desktop. Or the Photos app’s image generator, which often produces… less-than-stellar results. Windows Studio Effects offer webcam enhancements and semantic search makes files easier to find, but these are incremental improvements, a far cry from the powerful, game-changing AI experiences initially advertised.

Microsoft now appears to be acknowledging this disconnect. With the evolution of Windows ML, developers can build AI applications that run on CPUs, GPUs, *and* NPUs. The core issue remains, however: the most popular and capable local AI tools bypass NPUs altogether and run on GPUs instead.

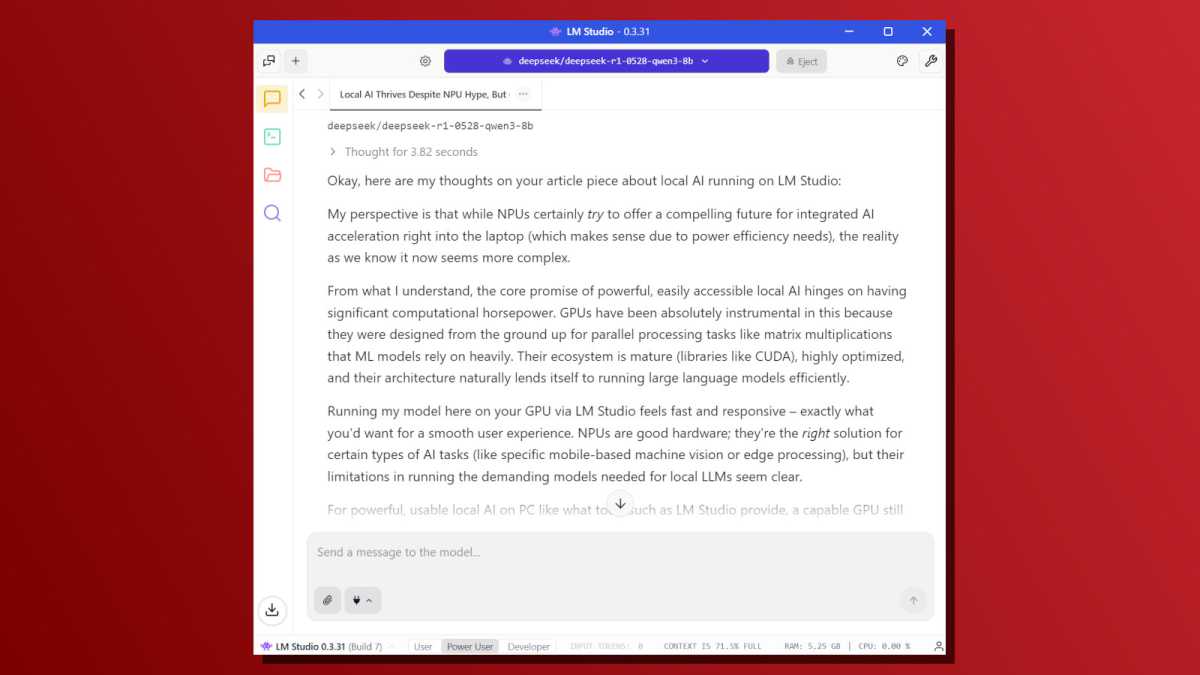

If you have a gaming PC, you can unlock the true potential of local AI with tools like LM Studio. With a few clicks, you can run a large language model (LLM) locally and chat with an AI entirely on your own hardware. This is the dream realized: a user-friendly experience that requires no technical expertise. It’s here, just not on the devices it was originally meant for.

Tools like Ollama and llama.cpp, which many other AI applications are built on, also ignore the NPUs in AI PCs entirely. Why wasn’t more effort invested in adding NPU support to these widely adopted, open-source projects? A fraction of the marketing budget spent on “AI PCs” could have dramatically changed the landscape.

The current situation is stark: if you’re serious about local AI, skip the so-called “AI PCs” and their NPUs. Invest instead in a gaming PC with a powerful GPU, ideally from Nvidia, since most local AI tools are optimized for Nvidia’s CUDA platform. This is where the real innovation is happening.

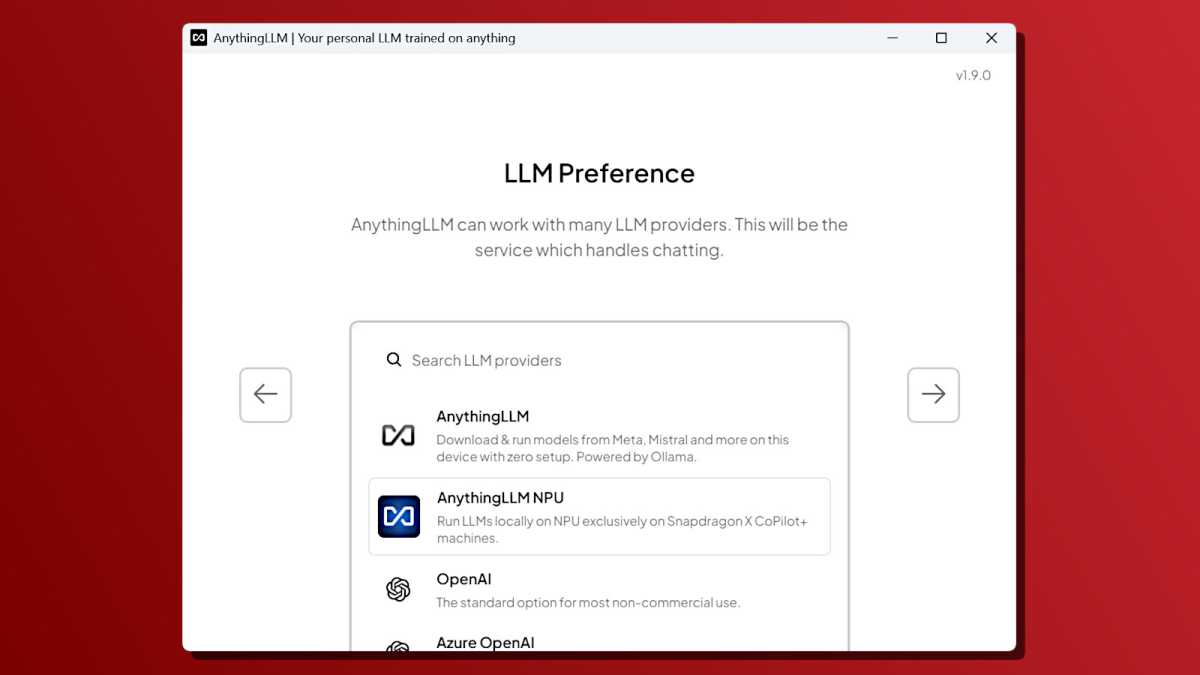

There is one notable exception: AnythingLLM, which offers an NPU backend for Qualcomm Snapdragon X systems. However, it’s a niche solution that triggers Windows security warnings and remains largely outside the mainstream. It also highlights a critical problem: NPU support is fragmented across manufacturers. Qualcomm, Intel, and AMD each ship their own NPU with its own software stack, so a tool built for one vendor’s chip doesn’t automatically run on another’s, which creates a significant barrier to adoption.

The original promise of NPUs was democratization: making local AI accessible to everyone, regardless of budget or hardware, by offering a power-efficient alternative to expensive, power-hungry GPUs. But that vision has crumbled. Easy-to-use local AI tools require a powerful GPU, while lightweight laptops with NPUs are confined to basic Windows features and obscure developer demos.

Microsoft’s strategy has inadvertently created two distinct local AI experiences. The NPU-powered Copilot+ PC offers a playground of limited tech demos, leaving users underwhelmed. Meanwhile, GPU-powered PCs unlock a world of open-source tools that Microsoft largely ignores. The result is a fractured ecosystem and a missed opportunity.

Microsoft has effectively told the people most actively exploring local AI that its built-in Windows features aren’t for them. Because these features are tied exclusively to NPUs, they’re unavailable even on PCs with powerful GPUs. As a result, many of those users have turned away from Microsoft’s AI offerings in favor of independent, GPU-driven tools.

The great NPU push ultimately amounted to a marketing campaign that failed to deliver on its promises. The future of local AI isn’t about waiting for NPUs to catch up; it’s about embracing the power of GPUs. If you want fast, capable AI running on your own machine, prioritize a powerful GPU over the allure of the “AI PC.”