Microsoft has introduced a new device category with Copilot+. Only laptops with a dedicated Neural Processing Unit (NPU), at least 16 GB of RAM and a fast NVMe SSD fulfil the minimum requirements.

Intel is addressing these requirements with the Core Ultra series, which combines classic CPU cores with GPU acceleration and a hardwired NPU. In the Core Ultra 200V models, the NPU alone achieves 40 to 47 trillion operations per second (TOPS), and Microsoft only certifies a device as a Copilot+ PC if its NPU delivers at least 40 TOPS. Put simply, if you want to use AI functions locally, you need a very fast computer with a chip specially optimized for AI.
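The entry requirements named above can be expressed as a simple checklist. The following is a minimal sketch, not an official Microsoft tool: the `DeviceSpec` type, the `copilot_plus_gaps` function and the example devices are hypothetical, and the thresholds (40 TOPS NPU, 16 GB RAM, NVMe SSD) are taken from this article rather than from Microsoft's complete specification.

```python
from dataclasses import dataclass

# Thresholds as stated in this article (simplified; not Microsoft's full spec)
MIN_NPU_TOPS = 40.0
MIN_RAM_GB = 16


@dataclass
class DeviceSpec:
    """Hypothetical description of a laptop's relevant hardware."""
    name: str
    npu_tops: float      # 0.0 means "no dedicated NPU"
    ram_gb: int
    has_nvme_ssd: bool


def copilot_plus_gaps(spec: DeviceSpec) -> list[str]:
    """Return the unmet requirements; an empty list means all are met."""
    gaps = []
    if spec.npu_tops <= 0:
        gaps.append("no dedicated NPU")
    elif spec.npu_tops < MIN_NPU_TOPS:
        gaps.append(f"NPU too slow: {spec.npu_tops:g} TOPS < {MIN_NPU_TOPS:g} TOPS")
    if spec.ram_gb < MIN_RAM_GB:
        gaps.append(f"not enough RAM: {spec.ram_gb} GB < {MIN_RAM_GB} GB")
    if not spec.has_nvme_ssd:
        gaps.append("no NVMe SSD")
    return gaps


if __name__ == "__main__":
    # Hypothetical example devices with made-up values
    for device in (
        DeviceSpec("Laptop A", npu_tops=45.0, ram_gb=16, has_nvme_ssd=True),
        DeviceSpec("Laptop B", npu_tops=0.0, ram_gb=8, has_nvme_ssd=True),
    ):
        gaps = copilot_plus_gaps(device)
        print(device.name, "OK" if not gaps else "; ".join(gaps))
```

Returning the list of gaps rather than a single yes/no shows why a device falls short, which matters because not every laptop marketed for AI meets every requirement.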

Core Ultra processors with local AI acceleration

For modern AI applications, the clock rate is no longer the only decisive factor; the internal distribution of tasks between the computing units matters just as much. The Intel Core Ultra 7 268V, for example, has eight CPU cores and an integrated Intel Arc GPU.

The set-up is complemented by the NPU, which is designed specifically for inference-based AI tasks. This allows text analysis, translation, video filters, image generation or language models to run directly on the chip, without any detour via the cloud.

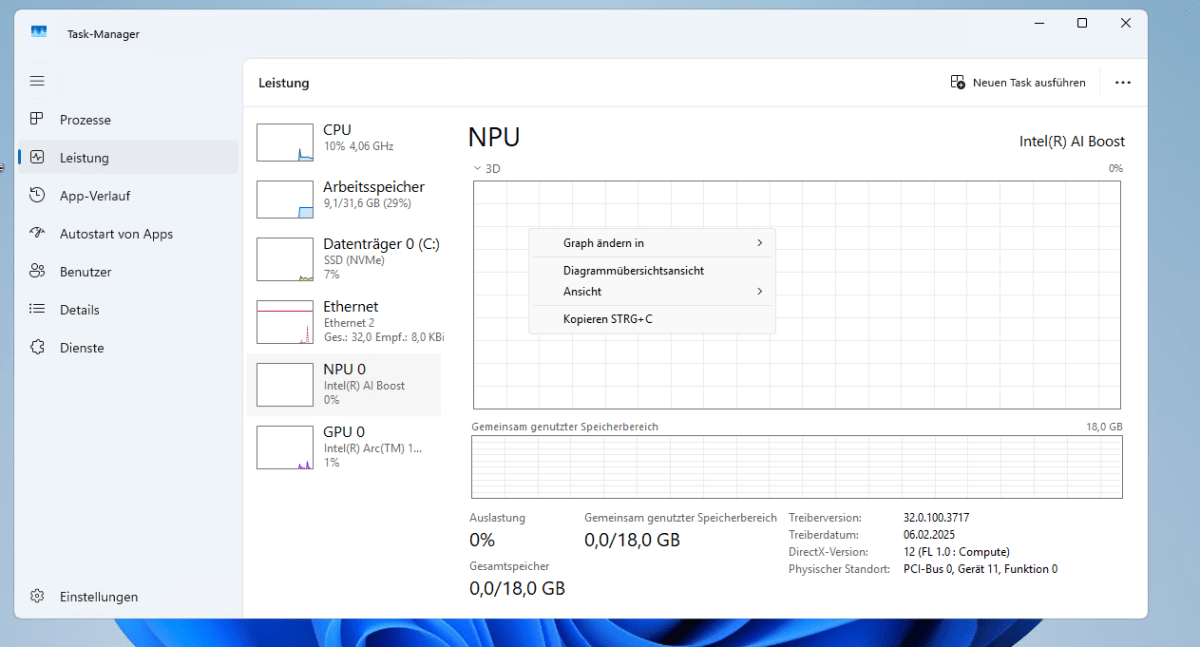

The Intel NPU cannot be configured separately; its activity is controlled via Windows components. Functions such as Recall, Cocreator or Windows Studio Effects access it automatically; there is no provision for manual assignment.

Intel

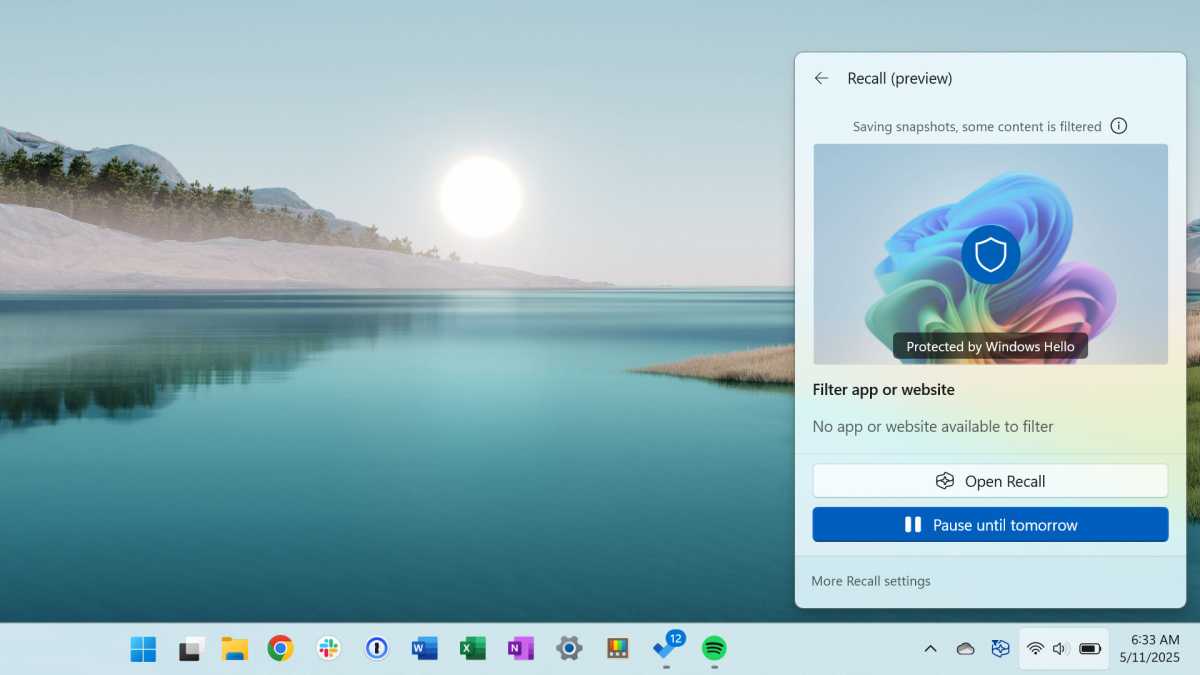

Copilot functions between benefit and control

The central innovation in Copilot+ is not the graphical user interface, but the shift of AI processing to the device itself. The Recall feature continuously takes screenshots of all on-screen activity.

These can later be searched using a text search. The feature works locally, stores content in encrypted form and can be disabled for specific applications. However, users have no insight into the underlying data model.

Chris Hoffman / Foundry

The Live Captions function covers a special use case. It transcribes any audio signal in real time, regardless of whether it comes from a video, a video call or a locally played file. The Intel NPU handles the continuous speech-to-text conversion at system level. The captions appear either as an overlay directly above the respective window or as a separate bar at the bottom of the screen.

Unlike conventional subtitles, Live Captions does not depend on a prepared subtitle track: it automatically detects speech in the audio and converts it into readable text on the fly.

No internet connection is required. Translations can also be enabled so that English-language content, for example, is automatically subtitled in German. If the NPU performance is sufficient, the delay remains minimal. The function works system-wide, which creates real added value, especially for people with impaired hearing or in noisy environments. No per-program activation is necessary: as soon as Live Captions is switched on, the system analyses all sound sources. However, the output is limited to the visible transcript; nothing is stored or analysed beyond the current session.
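Why "sufficient NPU performance" matters can be illustrated with a simplified streaming model. The sketch below assumes, purely for illustration, that audio arrives in fixed-length chunks and that a single accelerator transcribes one chunk at a time; it is not how Microsoft implements Live Captions, and `caption_delay` is a hypothetical helper. As long as transcribing a chunk takes less time than the chunk lasts, the caption delay stays constant; if it takes longer, the delay keeps growing.

```python
def caption_delay(chunk_s: float, processing_s: float, n_chunks: int) -> float:
    """Delay (seconds) between the end of the last audio chunk and its caption.

    Simplified model (assumption): chunks of length chunk_s arrive back to back,
    and one processor transcribes them in order, each taking processing_s.
    """
    free_at = 0.0   # time at which the processor becomes idle
    delay = 0.0
    for i in range(n_chunks):
        audio_ready = (i + 1) * chunk_s        # chunk i is fully recorded
        start = max(audio_ready, free_at)      # wait for the audio and the processor
        free_at = start + processing_s         # caption for chunk i appears here
        delay = free_at - audio_ready
    return delay


if __name__ == "__main__":
    # Fast enough: 0.5 s of work per 1 s of audio, so the delay stays at 0.5 s
    print(caption_delay(1.0, 0.5, 60))
    # Too slow: 1.5 s of work per 1 s of audio, so the delay grows with every chunk
    print(caption_delay(1.0, 1.5, 60))
```

In this model the critical figure is the ratio of processing time to audio length: below 1 the captions keep pace, above 1 they fall further behind the longer the audio runs.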

Chris Hoffman / IDG

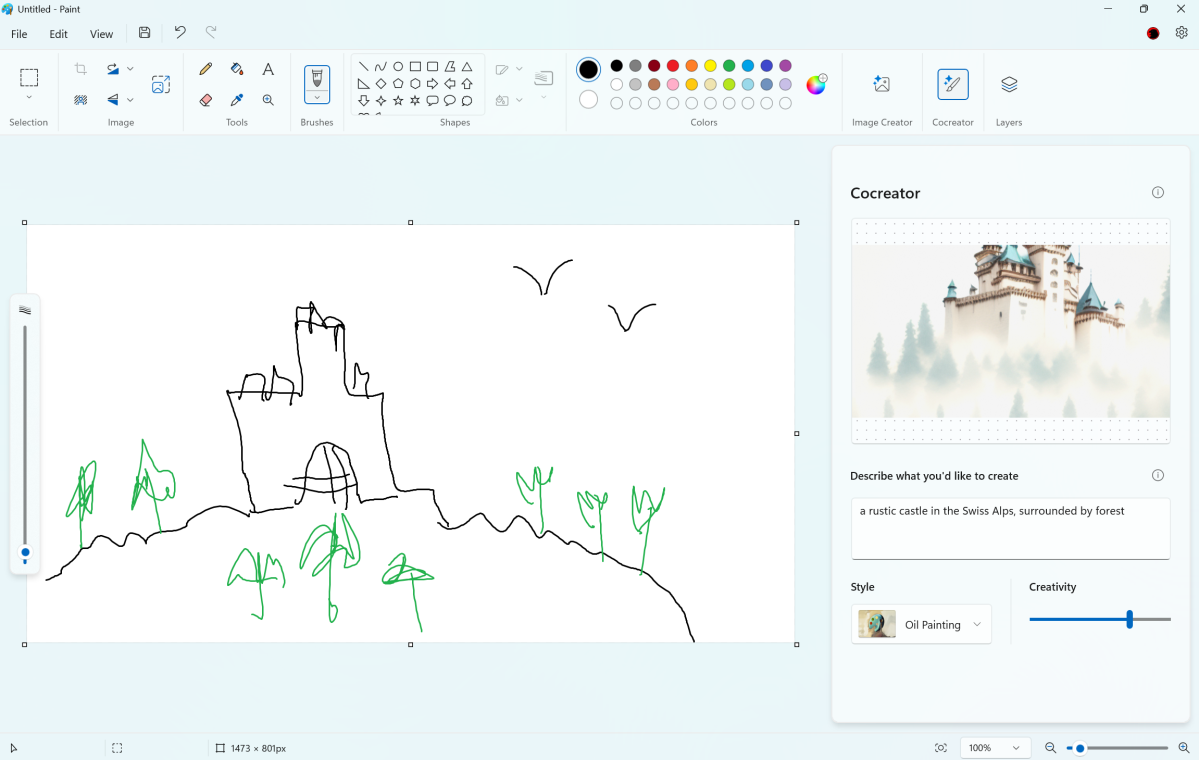

Local image synthesis with Paint Cocreator

The Cocreator function integrated in Paint makes it possible to create images from simple text input within a few seconds. This requires a Copilot+ certified device with an active NPU. The underlying diffusion model runs locally and uses both the main memory and the dedicated NPU for image generation.

In contrast to cloud services, the entire process remains on the device. A short text-based prompt is sufficient to receive several image suggestions in low to medium resolution, which can then be edited directly in Paint. Users can influence the style, color scheme and complexity of the motif – but only within the specified limits.

The model itself remains a black box; external models cannot be integrated. Without an NPU, Cocreator is hidden or refuses to start due to insufficient system resources.

Mark Hachman / IDG

In addition to pure computing power, Intel Core Ultra notebooks have specialized technologies to optimize the use of AI in mobile operation. The so-called Dynamic Tuning Technology analyses temperature, usage behavior and energy profile in real time and automatically adjusts the distribution of loads to the CPU, GPU and NPU.

This is complemented by Intelligent Display, which adjusts screen brightness, contrast and refresh rate depending on the context. This can noticeably extend battery life when the notebook runs unplugged. The technology also proves useful during longer video conferences or remote work, as it eases the load on thermal management while improving visibility.

The NPU also benefits from the reduced GPU load, which creates more thermal headroom for inference tasks. Inference-based processes are tasks in which an AI makes decisions or delivers results based on an already trained model. The actual learning phase, the training, has already been completed; during inference, this knowledge is applied to new input.

One example is the automatic subtitling of videos with Live Captions. The AI was previously trained on huge speech data sets. During inference, it recognizes in real time what someone is saying, converts the speech into text and displays it directly on the screen.

It does not need the training data for this; instead, it uses a compact, optimized model. Crucially, inference can run efficiently on the device itself when a dedicated accelerator such as an NPU is available. This means that data remains on the notebook and AI responses are almost instantaneous.
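The difference between training and inference can be shown with a toy model. In this minimal sketch the weights are hypothetical values assumed to come from an earlier training run; the `infer` function only applies them to new input and never changes them. Real speech models such as those behind Live Captions are vastly larger, but the principle of a frozen, pre-trained model applied to fresh data is the same.

```python
import math

# Hypothetical parameters, assumed to be the result of an earlier training run.
# Tuples are immutable, which underlines that inference never modifies them.
TRAINED_WEIGHTS = (0.8, -1.5, 0.3)
TRAINED_BIAS = 0.1


def infer(features: tuple[float, ...]) -> float:
    """Apply the frozen model to new input and return a probability in (0, 1)."""
    if len(features) != len(TRAINED_WEIGHTS):
        raise ValueError("expected %d features" % len(TRAINED_WEIGHTS))
    score = TRAINED_BIAS + sum(w * x for w, x in zip(TRAINED_WEIGHTS, features))
    return 1.0 / (1.0 + math.exp(-score))  # logistic function


if __name__ == "__main__":
    # New, previously unseen inputs: only a forward pass, no learning step
    print(round(infer((1.0, 0.0, 2.0)), 3))
    print(round(infer((0.0, 1.0, 0.0)), 3))
```

Because no gradients or weight updates are involved, this kind of computation is exactly what an inference accelerator such as an NPU is built to run cheaply.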

Energy efficiency and system behavior under load

One advantage of the Intel architecture is the thermal separation of the computing units: CPU, GPU and NPU do not compete directly for power reserves. Because the NPU handles only inference-based processes, AI features can run on this specialized unit instead of the CPU or GPU, which significantly reduces power consumption while they are active.

Tests with the Core Ultra 9 285K show up to 25 per cent better efficiency compared to the Core i9 14900K, and battery life benefits noticeably from this shift. Systems such as the Lenovo Yoga Slim 7i Aura Edition achieve up to 22 hours of video playback without any loss of performance in AI processes. Continuous support from the BIOS and Windows is decisive here: without the latest UEFI update, the NPU often does not reach its full performance.
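What "up to 25 per cent better efficiency" means for energy use is easy to misread. Assuming efficiency $\eta$ is defined as work $W$ done per unit of energy $E$ (the article does not state the exact metric), the same workload needs 20 per cent less energy, not 25 per cent less:

```latex
\eta = \frac{W}{E}, \qquad \eta_{\text{new}} = 1.25\,\eta_{\text{old}}
\;\Rightarrow\;
E_{\text{new}} = \frac{W}{1.25\,\eta_{\text{old}}} = \frac{E_{\text{old}}}{1.25} = 0.8\,E_{\text{old}}
```

Conversely, at the same work rate a battery of fixed capacity could in principle sustain that workload 1.25 times as long; real battery life also depends on the display, storage and other components.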

In addition to the Lenovo Yoga Slim, other manufacturers already offer devices intended for local AI applications under Windows 11. The Acer Swift Go 14, for example, is available with the Intel Core Ultra 7 155H. Note, however, that the NPU of this previous-generation chip delivers only around 11 trillion operations per second, well below the 40 required for Copilot+ certification.

Thomas Joos

It is worth looking closely at the specific device, as not every laptop advertised as a “Copilot+ PC” fulfils the same requirements. Notebooks with Intel Evo certification and Core Ultra 200V processors undergo a validation program tailored to real-life usage scenarios. It requires not only high performance and long battery life, but also fast wake from standby, smooth multi-monitor connection via Thunderbolt 4 and consistent behavior when several applications are used at the same time.

For Copilot, this means that functions such as Recall, Live Captions and Cocreator run without any loss of performance, even when switching between mains and battery operation. Evo-certified devices such as the Acer Swift Go or the Surface models from the business segment are thus exemplary for this new class of hybrid AI PCs, which combine everyday usability and technical sophistication.

YouTube / Microsoft

Intel divides the Core Ultra Series 2 into several model lines that have a direct impact on the field of application of mobile systems. The V models serve as a reference for slim premium devices and impress with their high efficiency and strong NPU performance.

H models are aimed at classic high-end notebooks for productive work with medium mobility requirements. U models are designed for lightweight, ultra-mobile systems with low energy requirements that still offer AI functions. HX models represent the top of the performance range with up to 24 physical cores and are primarily intended for workstations, gaming laptops and professional creative environments.

V and H models are particularly suitable for everyday use in AI-supported Windows applications, as they offer a good balance between battery life, thermal budget and inference performance of the NPU.
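The suffix at the end of a model number indicates which of these lines a processor belongs to. The following minimal sketch decodes it; the `model_line` helper and the segment descriptions are a hypothetical summary of the paragraphs above, not an Intel API, and the regular expression simply assumes that the model number ends in three digits followed by the suffix letters.

```python
import re
from typing import Optional

# Summary of the model lines described above (hypothetical helper data)
MODEL_LINES = {
    "V": "slim premium devices: high efficiency, strong NPU",
    "H": "high-end notebooks with medium mobility requirements",
    "U": "lightweight, ultra-mobile systems with low energy needs",
    "HX": "workstations, gaming laptops, creative environments",
}


def model_line(cpu_name: str) -> Optional[str]:
    """Return the mobile model-line suffix (e.g. 'V'), or None if not covered."""
    match = re.search(r"\d{3}([A-Z]+)$", cpu_name.strip())
    if match is None or match.group(1) not in MODEL_LINES:
        return None
    return match.group(1)


if __name__ == "__main__":
    for name in ("Intel Core Ultra 7 268V", "Intel Core Ultra 9 275HX", "Intel Core Ultra 9 285K"):
        line = model_line(name)
        print(name, "->", MODEL_LINES[line] if line else "not a mobile line covered here")
```

The desktop Core Ultra 9 285K mentioned in the efficiency comparison carries a K suffix, so the helper deliberately returns None for it.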

What Intel NPUs can do and what is not visible

The practical use of the NPU is tied to the respective features in Windows. Windows' on-board tools do not allow users to program the NPU themselves or to run their own models on it. Intel does provide developers with APIs, but in everyday use these paths are out of reach for users without programming knowledge.
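For developers, one such path is Intel's OpenVINO toolkit, whose runtime addresses the compute units by device names such as "CPU", "GPU" and "NPU". The sketch below picks the preferred unit from the list of available devices and falls back to GPU or CPU. The `pick_device` helper and its preference order are assumptions for illustration; the OpenVINO query is only attempted if the `openvino` package is installed. The returned name would then be passed to `Core.compile_model` together with a model.

```python
from typing import Sequence


def pick_device(available: Sequence[str],
                preference: Sequence[str] = ("NPU", "GPU", "CPU")) -> str:
    """Return the first available device in order of preference.

    Device names may carry an index suffix such as "GPU.0", so both the
    bare name and "<name>.<index>" are accepted.
    """
    for wanted in preference:
        for device in available:
            if device == wanted or device.startswith(wanted + "."):
                return device
    raise RuntimeError(f"none of {list(preference)} found in {list(available)}")


def query_openvino_devices() -> list[str]:
    """List devices reported by OpenVINO, or an empty list if it is not installed."""
    try:
        import openvino as ov
    except ImportError:
        return []
    return list(ov.Core().available_devices)


if __name__ == "__main__":
    devices = query_openvino_devices() or ["CPU"]  # assume at least a CPU
    print("available:", devices)
    print("selected:", pick_device(devices))
```

Making the fallback explicit in code is precisely the control that, as described above, Windows keeps to itself for its built-in Copilot+ features.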

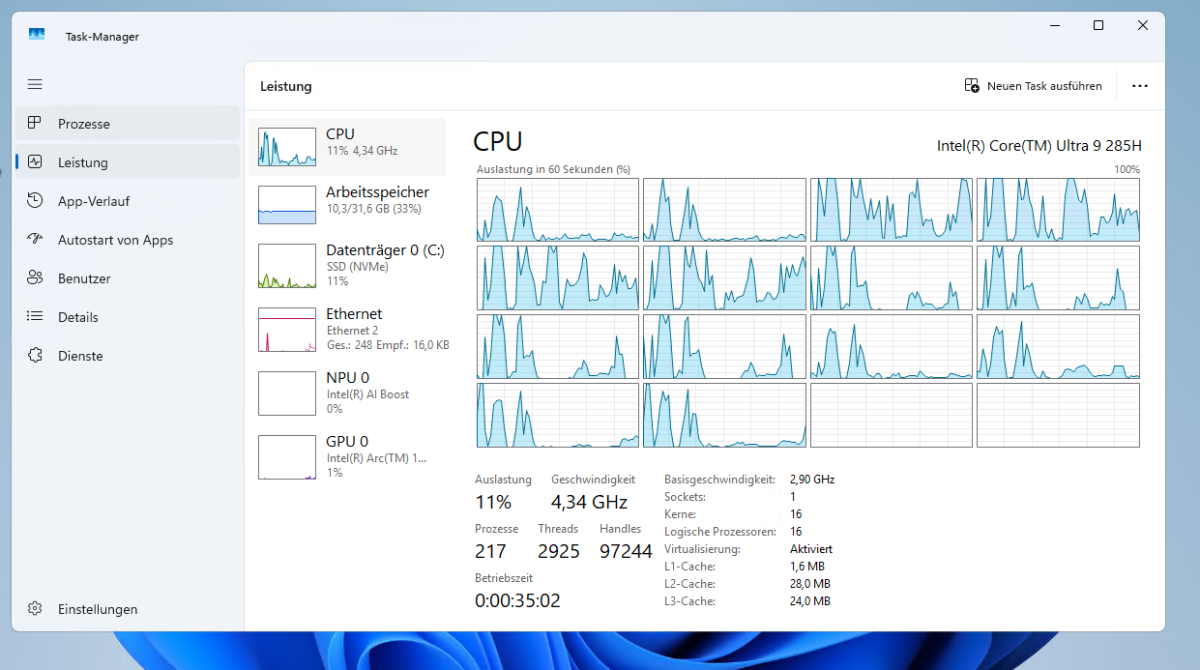

NPU utilization is only visible through indicators in Task Manager or third-party tools. Even power users cannot tell which processes are running on which unit and when. Control remains with the operating system; at best, the division of work between CPU and NPU can be inferred indirectly from utilization profiles.

Thomas Joos

Intel offers additional functions for corporate use with the vPro platform based on the Core Ultra series. In addition to the integration of security-relevant features such as hardware-based identity verification, the NPU can also be used directly by security software to analyze threats locally and detect behavioral anomalies at an early stage.

In addition, vPro Device Discovery supports detailed queries on installed components, energy profiles and configuration states. In combination with Copilot functions, this creates devices that offer a high degree of transparency and control for both IT administrators and users in regulated industries – without direct access to the underlying models.

Bottom line

With an Intel Core Ultra 200V series device, you gain access to a clearly defined group of AI functions. These run locally, do not require an internet connection and benefit directly from the NPU. However, control over computing execution remains limited: Windows automatically determines which unit becomes active, while the setting options only allow rudimentary intervention.

For productive use, this primarily means a gain in efficiency – but not deeper system control. The new device class is therefore more of a platform than a toolbox. Those looking for precise AI customizations will quickly reach their limits. On the other hand, those who rely on stable, locally executed features will find a convincing hardware basis in the Intel-based Copilot devices.